SDXL-Lightning: quick look and comparison

With SDXL-Lightning you can generate extremely high quality images using a single step.

Introduction

SDXL-Lightning (paper) is a new progressive adversarial diffusion distillation method created by researchers at ByteDance (the company that owns TikTok) to generate high-quality images in very few steps (hence "lightning").

This proposal builds on previous work such as SDXL Turbo and LCM-LoRA, adding a series of improvements to eliminate the main limitations of those methods.

SDXL-Lightning is not distributed as a single multi-step model. Instead, there is a checkpoint optimized for each step count: checkpoints for 2, 4 and 8 steps, plus an experimental checkpoint for inference in a single step. For each checkpoint you can download the full model with all components, just the U-Net model, or a LoRA module to use SDXL-Lightning with any other Stable Diffusion XL model (except for the 1-step checkpoint, where the LoRA module is not available).

In this article we are going to see how it works and what improvements it introduces, as well as a comparison with other models in its category.

You can test the model in the following spaces:

- huggingface (8, 4, 2 and 1 step)

- replicate (4 steps)

- fastsdxl (4 steps)

- huggingface (2 steps)

Methodology

To avoid repeating the whole process here, you will find the methodology used for the tests in this article. For all tests I have used the following prompts and seeds:

- Python

queue = []

# Food

queue.extend([{

'prompt': 'food photography, cheesecake, blueberries and jam, cinnamon, in a luxurious Michelin kitchen style, depth of field, ultra detailed, natural features',

'seed': 78934567,

}])

# Portrait

queue.extend([{

'prompt': 'close up, woman, headdress, neon iridescent tattoos, snow forest, intricate, 8k, cinematic lighting, volumetric lighting, 8k',

'seed': 55986344589,

}])

# Animal

queue.extend([{

'prompt': 'a fish made out of dendrobium flowers, ultrarealistic, highly detailed, 8k',

'seed': 501798843,

}])

# Macro

queue.extend([{

'prompt': 'macro photography of beautiful nails with manicure, painting, oil paints, gold splashes, professional color palette',

'seed': 1963386471,

}])

# Text

queue.extend([{

'prompt': 'video game room with a big neon sign that says "arcade", cinematic, 8k, natural lighting, HDR, high resolution, shot on IMAX Laser',

'seed': 1138562738,

}])

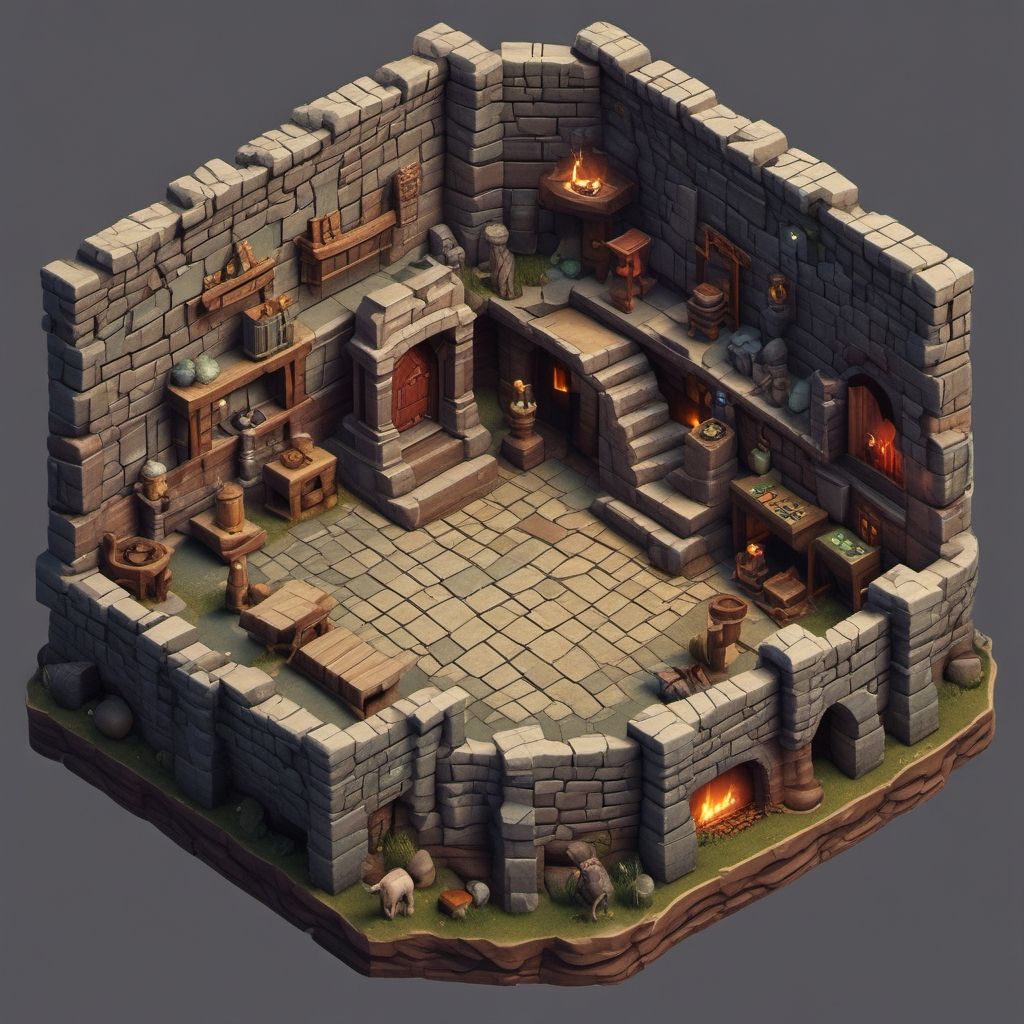

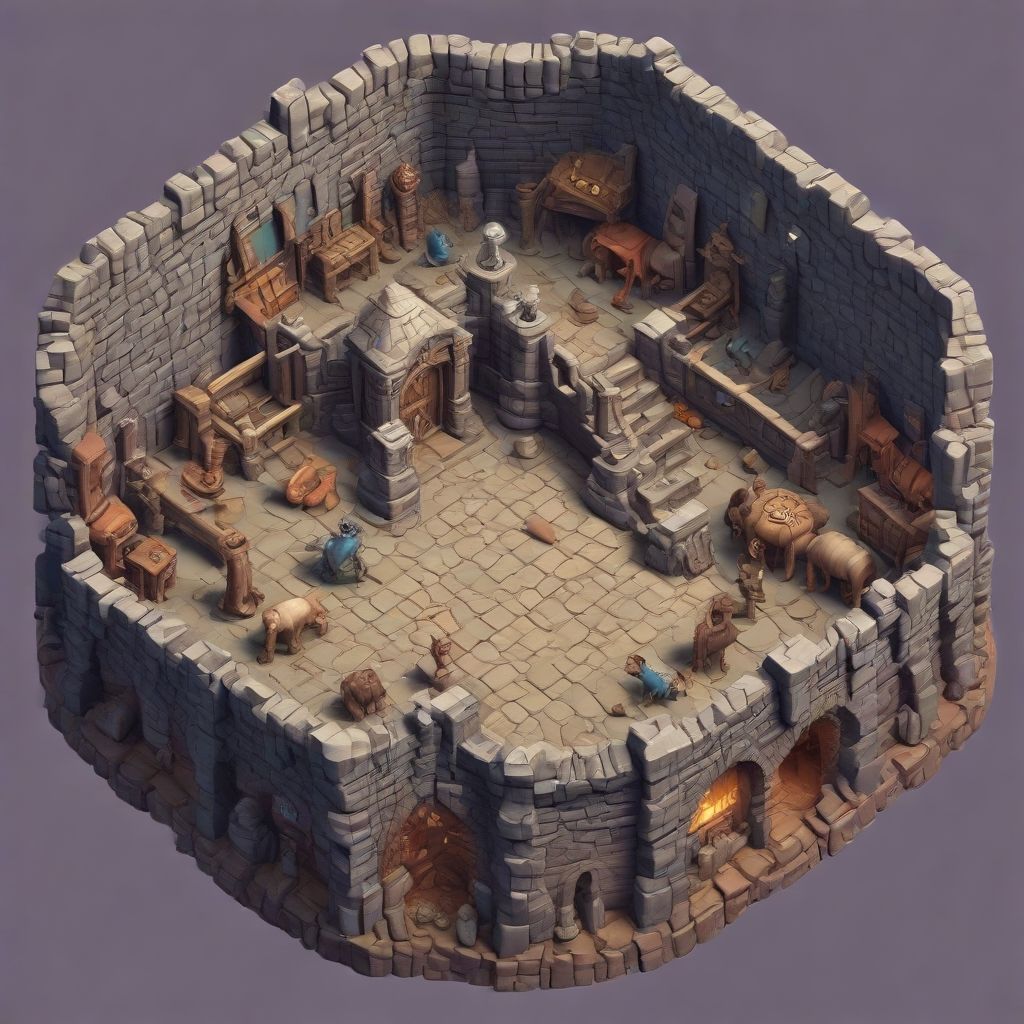

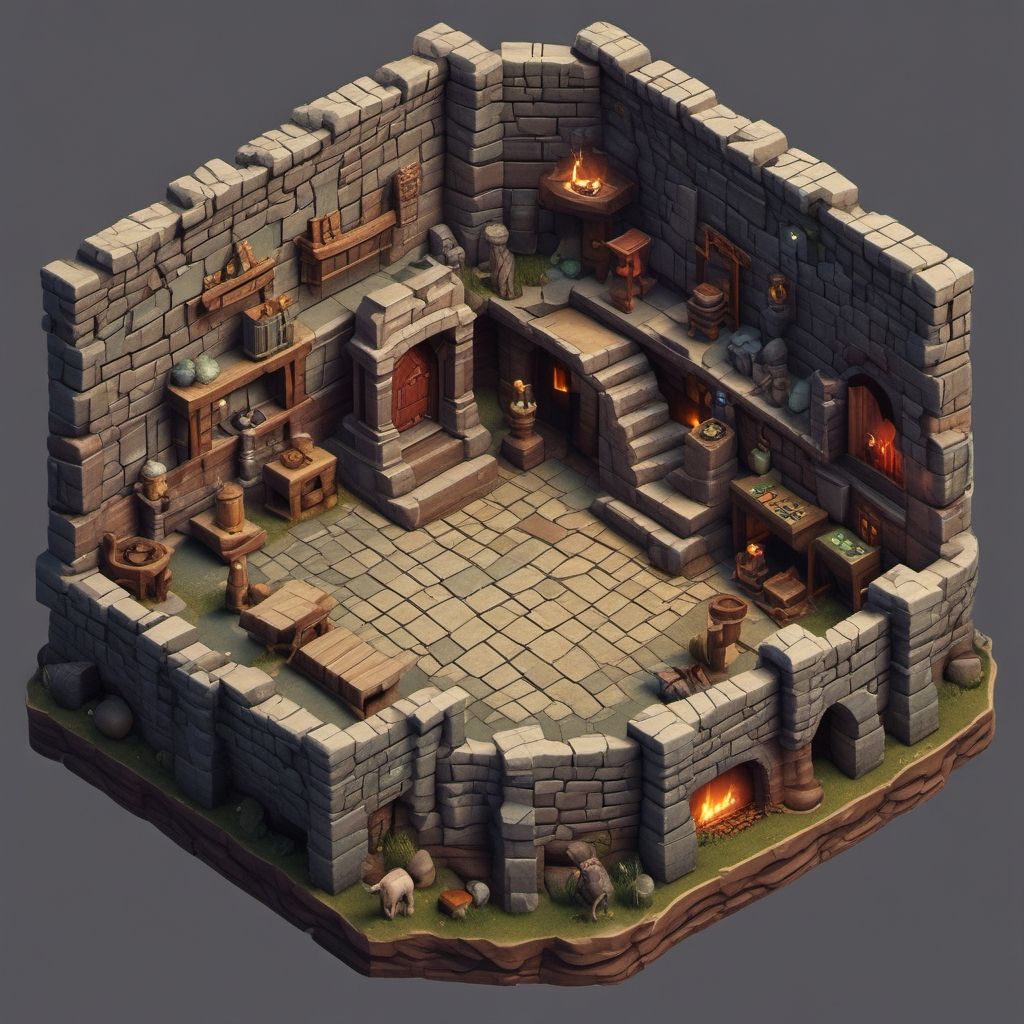

# 3D

queue.extend([{

'prompt': '3d isometric dungeon game',

'seed': 3812179903,

}])SDXL-Lightning, SDXL Turbo and LCM-LoRA do not use negative prompt or CFG value. These methods already used a CFG value during their training, so there is no need to use it again as it would reduce the quality of the result.

To get an idea of the results we can obtain, these are the images generated with SDXL-Lightning in 4 steps:

Remember that in the article "Ultimate guide to optimizing Stable Diffusion XL" you will find a lot of optimizations to decrease the memory usage of SDXL-Lightning and to increase its speed even more.

The images you will see in the article are displayed with a maximum size of 512x512 for easy reading, but you can open the image in a new tab/window to view it in its original size.

You will find all the tests in individual files inside the blog repository on GitHub.
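As a rough sketch of how the prompt queue and the no-CFG rule described above might translate into generation settings: the helper below is hypothetical (`build_settings` is not part of any library) and assumes a diffusers-style pipeline, where `num_inference_steps` and `guidance_scale` are the usual argument names and a guidance scale of 0 turns CFG off. The queue is shortened to two of the prompts listed earlier, and no model is loaded.

```python
# Hypothetical helper: turns one queue entry into keyword arguments for a
# diffusers-style pipeline call. It only builds settings; nothing is generated.
queue = [
    {'prompt': 'a fish made out of dendrobium flowers, ultrarealistic, highly detailed, 8k',
     'seed': 501798843},
    {'prompt': '3d isometric dungeon game',
     'seed': 3812179903},
]


def build_settings(job, steps):
    """Return (seed, call kwargs) for one job, with CFG off and no negative prompt."""
    kwargs = {
        'prompt': job['prompt'],
        'num_inference_steps': steps,
        # CFG was already applied during distillation, so it is disabled here.
        'guidance_scale': 0.0,
        # No 'negative_prompt' key on purpose.
    }
    return job['seed'], kwargs


for job in queue:
    seed, kwargs = build_settings(job, steps=4)
    print(seed, kwargs['num_inference_steps'], kwargs['guidance_scale'])
```

In a real run the seed would typically go to a random generator (for example `torch.Generator().manual_seed(seed)`) and the keyword arguments to the pipeline call.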

Let's get started!

Knowledge distillation

Knowledge distillation is the process in which a large model transfers its knowledge to a smaller one.

When we run inference in Stable Diffusion XL over 50 steps, the U-Net model removes noise gradually to get closer to the desired result. This approach is slow and not very efficient, as it takes quite a few steps. If we try to reduce the number of steps to 2 or 4, the result will be disastrous. It's like playing golf: it's easier to get the ball in the hole with 50 strokes than with 2.

And this is where this process comes into play.

Progressive distillation

Progressive distillation was used when training SDXL-Lightning. This technique relies on a teacher/student architecture (paper): the student model learns to reach the teacher's result directly, without having to learn every intermediate step of the process.

It's as if the teacher told the student: "Hey, I'm able to generate this image in 50 steps, but I'm going to teach you how to achieve the same result in 8 steps". After the process, the trained model will not be able to generate the same images that would be generated in 32, 33, 34 steps... but therein lies the virtue.

Once the student model has learned to perform this task, he becomes the teacher and tells his next student: "Hey, I'm able to generate this image in 8 steps, but I'm going to teach you to do it in 4 steps".

And the process continues until it's no longer possible to transfer knowledge without incurring a great loss of quality, hence progressive.

In SDXL-Lightning, training started from a teacher running 128 steps, and the process was repeated until the model could generate decent results in a single step. Because of this, an SDXL-Lightning image generated in 4 steps can have higher visual quality than a Stable Diffusion XL image generated in 50 steps, since SDXL-Lightning is trying to reproduce the image generated in 128 steps, not 50.
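The teacher/student hand-off can be illustrated with a deliberately tiny toy. This is not the actual training procedure: here "denoising" is a single number moving toward a target, the student is fitted in closed form instead of being trained with a loss, and halving the step count at every round is an assumption made for illustration. All function names are hypothetical.

```python
def denoise(x, target, rate, steps):
    """Run `steps` denoising steps, each moving x a fraction `rate` toward target."""
    for _ in range(steps):
        x = x + rate * (target - x)
    return x


def distill(rate):
    """Per-step rate of a student whose single step matches two teacher steps."""
    # Two teacher steps leave a fraction (1 - rate)**2 of the noise,
    # so one student step has to remove 1 - (1 - rate)**2 of it.
    return 1.0 - (1.0 - rate) ** 2


def progressive_distillation(teacher_rate, teacher_steps):
    """Halve the step count repeatedly; each student becomes the next teacher."""
    schedule = [(teacher_steps, teacher_rate)]
    rate, steps = teacher_rate, teacher_steps
    while steps > 1:
        rate, steps = distill(rate), steps // 2
        schedule.append((steps, rate))
    return schedule


noisy, clean = 10.0, 2.0
schedule = progressive_distillation(teacher_rate=0.03, teacher_steps=128)
for steps, rate in schedule:
    # Every student lands on the same value as the 128-step teacher.
    print(f"{steps:>3} steps -> {denoise(noisy, clean, rate, steps):.6f}")
```

In this toy the students match the teacher exactly; a real student network only approximates its teacher, which is why quality eventually degrades as the step count shrinks.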

Adversarial distillation

The other technique used during training is called adversarial distillation.

Adversarial comes from generative adversarial networks (GAN). In this process, two neural networks compete in a kind of zero-sum game. One neural network (the generator) generates data (for example, an image) and tries to fool the other neural network (the discriminator), which is in charge of verifying the result. In SDXL-Lightning, the model representing the student is the generator.

To continue with the previous analogy, the discriminator model has an image generated by the teacher in 50 steps. The student gives images to the discriminator saying: "Hey, look, this image is from the teacher. He generated it in 50 steps".

The discriminator decides whether to believe him or not. He probably won't. But the student doesn't give up and tries again, doing a bit better this time. Oh wow, second failed attempt. And so, gradually, the student improves until he catches up with the teacher. There comes a point where the discriminator no longer knows how to distinguish the images and is fooled. He has accepted that the image has been generated by the teacher and the student wins the game.
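The game described above can be expressed as two opposing losses. The sketch below uses the standard non-saturating GAN objective on made-up discriminator scores; whether it matches the exact loss used to train SDXL-Lightning is an assumption, and the scores are hypothetical numbers rather than outputs of a real model.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def discriminator_loss(teacher_logits, student_logits):
    """Low when teacher images score high and student images score low."""
    real = sum(softplus(-s) for s in teacher_logits) / len(teacher_logits)
    fake = sum(softplus(s) for s in student_logits) / len(student_logits)
    return real + fake


def generator_loss(student_logits):
    """Low when the discriminator believes the student's images are the teacher's."""
    return sum(softplus(-s) for s in student_logits) / len(student_logits)


# Hypothetical discriminator scores (logits): positive means "looks like the teacher".
teacher = [2.0, 1.5, 2.5]
early_student = [-2.0, -1.0, -1.5]   # easily spotted as fakes
late_student = [1.8, 1.6, 2.2]       # nearly indistinguishable from the teacher

print("student loss:", generator_loss(early_student), "->", generator_loss(late_student))
print("discriminator loss:", discriminator_loss(teacher, early_student),
      "->", discriminator_loss(teacher, late_student))
```

As the student improves, its own loss falls while the discriminator's loss rises, which is the zero-sum flavour of the game.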

SDXL Turbo also uses adversarial distillation, but here we find one of the major differences. The Turbo model uses the pretrained DINOv2 model as the discriminator. Because it works in pixel space, this model substantially increases memory consumption and training time, which is one of the reasons why SDXL Turbo was trained with 512x512 pixel images.

SDXL-Lightning, on the other hand, uses Stable Diffusion XL's own U-Net model as the discriminator. As this model works in latent space and not in pixel space, these limitations are reduced and training could be performed with 1024x1024 pixel images, which significantly increases the quality of the result.
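A quick back-of-the-envelope calculation shows why latent space is cheaper. It assumes SDXL's usual VAE layout (each side shrunk by a factor of 8, 4 latent channels) and counts tensor elements only, which is a rough proxy for memory rather than a measurement.

```python
VAE_DOWNSCALE = 8      # SDXL's VAE shrinks each side of the image by 8
LATENT_CHANNELS = 4    # and encodes the image into 4 latent channels


def tensor_elements(width, height, channels):
    """Number of values in a width x height x channels tensor."""
    return width * height * channels


def latent_elements(width, height):
    """Number of values in the latent tensor of a width x height image."""
    return tensor_elements(width // VAE_DOWNSCALE, height // VAE_DOWNSCALE, LATENT_CHANNELS)


pixels_512 = tensor_elements(512, 512, 3)       # RGB input at 512x512
pixels_1024 = tensor_elements(1024, 1024, 3)    # RGB input at 1024x1024
latents_1024 = latent_elements(1024, 1024)      # latent input at 1024x1024

print(pixels_512, pixels_1024, latents_1024)
print("1024px pixels vs latents:", pixels_1024 // latents_1024)
print("512px pixels vs 1024px latents:", pixels_512 // latents_1024)
```

Under these assumptions, a 1024x1024 latent holds fewer values than even a 512x512 RGB image.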

This change also introduces other improvements such as greater compatibility with control models (ControlNet) or LoRAs, as well as greater generalization capacity, something essential to generate images with greater variety or different styles.

Checkpoint comparison

Now that we know what knowledge distillation is and how knowledge can be transferred from a large model to a smaller one, let's compare how far the number of steps can be reduced. We will use the full model instead of the U-Net model to simplify the code.

The images have been generated with SDXL-Lightning in 8, 4, 2 and 1 step. In addition, the first image that serves as a reference has been generated with the Stable Diffusion XL base model in 50 steps.

| Model | Inference time | Change vs. base |
|---|---|---|
| Base (50 steps) | 13.2s | |
| 8 steps | 1.8s | -86.36% |
| 4 steps | 1.2s | -90.91% |
| 2 steps | 1s | -92.42% |
| 1 step | 0.8s | -93.94% |

In photorealistic images, SDXL-Lightning is able to deliver very high quality with any number of steps. It's amazing. In terms of prompt fidelity, you get noticeably higher accuracy with the 4 and 8 step models. As for text, it tries: I haven't seen any difference depending on the number of steps; sometimes it gets it right and sometimes it doesn't. In short, generating images in 1 or 2 steps has its use cases, but if we do not want to lose quality I give the prize to the 4-step model. It seems superior to me in all images.

Regarding inference time... what can be said about a 90% reduction? It's ridiculous.
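For reference, the percentages next to each timing follow from a simple relative change against the 50-step base time. A minimal sketch using the timings reported above (`percent_change` is just an illustrative helper name):

```python
def percent_change(base_seconds, seconds):
    """Relative change in inference time versus the base model, in percent."""
    return (seconds - base_seconds) / base_seconds * 100


base = 13.2  # Stable Diffusion XL base model, 50 steps
timings = {'8 steps': 1.8, '4 steps': 1.2, '2 steps': 1.0, '1 step': 0.8}

for name, seconds in timings.items():
    change = percent_change(base, seconds)
    print(f"{name}: {change:.2f}% ({base / seconds:.1f}x faster)")
```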

Memory usage is the same in both models (the base model and SDXL-Lightning).

LoRA

LCM-LoRA was a pioneering method in demonstrating that knowledge distillation can also be trained as a LoRA module. Although the quality of the result is not optimal, a series of advantages are achieved: the model takes up 20 times less space and, more importantly, the LoRA module can be used with any model other than the base model.
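To see why a LoRA module is so much smaller and why it can be applied to other models, here is a minimal sketch of the general LoRA idea: a low-rank update is added on top of a frozen weight matrix. The matrices and sizes are illustrative only; they are not the actual layer shapes or rank of the SDXL-Lightning LoRA, and the helper names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(weight, lora_up, lora_down, scale=1.0):
    """Return weight + scale * (lora_up @ lora_down)."""
    delta = matmul(lora_up, lora_down)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


def parameter_counts(out_features, in_features, rank):
    """Parameters in a full weight matrix vs. its rank-`rank` LoRA update."""
    return out_features * in_features, rank * (out_features + in_features)


weight = [[1.0, 0.0], [0.0, 1.0]]   # frozen weight of whichever base model is used (2x2)
lora_up = [[1.0], [2.0]]            # 2x1
lora_down = [[3.0, 4.0]]            # 1x2
print(merge_lora(weight, lora_up, lora_down))

# Illustrative sizes only: a 1280x1280 layer with a rank-64 update.
print(parameter_counts(1280, 1280, 64))
```

Because only the two small matrices are stored, the same update can be merged into the weights of any model that shares the base architecture.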

Let's compare images generated with the LoRA module in 8, 4 and 2 steps, adding two images for reference: Stable Diffusion XL (50 steps) and the SDXL-Lightning (4 steps) image seen above.

| Model | Inference time | Change vs. base |
|---|---|---|
| Base (50 steps) | 13.2s | |
| Lightning (4 steps) | 1.2s | -90.91% |
| LoRA 8 steps | 1.8s | -86.36% |
| LoRA 4 steps | 1.2s | -90.91% |
| LoRA 2 steps | 1s | -92.42% |

Here it's more or less the same. The LoRA in 2 steps suffers from the same problems as the full model in 2 steps, but the 4 and 8 step modules seem to have almost the same virtues as the full model, in addition to the extra advantages provided by this method.

There is no difference in inference time and memory usage between using the full model or the LoRA module.

Is it better to use the full model (or U-Net) or the LoRA module? I couldn't say. It seems that the full model provides a little more detail, but the story doesn't end here. As we saw previously, the main advantage of using LoRA is its compatibility with any other model.

Juggernaut XL

Let's perform another test, this time based on images generated with the acclaimed Juggernaut XL model and comparing them with images generated with the Stable Diffusion XL base model (the ones we just saw).

This is something else, huh? Even using the LoRA in 2 steps the result is spectacular. After this test I can say without a doubt that the LoRA module, thanks to its flexibility, can produce higher visual quality with custom models (especially in photorealistic images).

vs SDXL Turbo

SDXL Turbo stands out as a model capable of performing inference in just 1 single step, delivering enough quality for some use cases.

Let's compare a few images of SDXL Turbo and SDXL-Lightning using the full model, with generations in 4, 2 and 1 step.

Will SDXL Turbo still be up to the task?

| Model | Inference time | Memory |
|---|---|---|
| Lightning 4 steps | 1.2s | 11.24 GB |
| Turbo 4 steps | 0.4s | 8.22 GB |
| Lightning 2 steps | 1s | 11.24 GB |
| Turbo 2 steps | 0.3s | 8.22 GB |
| Lightning 1 step | 0.8s | 11.24 GB |
| Turbo 1 step | 0.2s | 8.22 GB |

Wow, it hurt even me. We must remember that this technology is advancing at a dizzying pace: what was state of the art 2 months ago has now practically become obsolete. Of course, we should not be too harsh on SDXL Turbo; thanks to the innovation it brought we now have SDXL-Lightning.

SDXL Turbo is capable of generating decent images in 2 steps and seems to work better when generating text, but it is clearly not superior.

Where the Turbo model does deserve credit is in inference time and memory usage. This leaves SDXL Turbo room for some use cases.
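To put numbers on that advantage, the figures from the table above can be compared directly. This is a small sketch over the reported values only; `compare` is a hypothetical helper name.

```python
# (inference seconds, memory in GB) copied from the comparison table.
results = {
    4: {'Lightning': (1.2, 11.24), 'Turbo': (0.4, 8.22)},
    2: {'Lightning': (1.0, 11.24), 'Turbo': (0.3, 8.22)},
    1: {'Lightning': (0.8, 11.24), 'Turbo': (0.2, 8.22)},
}


def compare(steps):
    """Return (how many times faster Turbo is, GB of memory Turbo saves)."""
    lightning_time, lightning_mem = results[steps]['Lightning']
    turbo_time, turbo_mem = results[steps]['Turbo']
    return lightning_time / turbo_time, lightning_mem - turbo_mem


for steps in results:
    speed_ratio, memory_saved = compare(steps)
    print(f"{steps} step(s): Turbo is {speed_ratio:.1f}x faster, uses {memory_saved:.2f} GB less")
```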

vs LCM-LoRA

LCM-LoRA is the result of converting into a LoRA module one of the best ideas of recent times, the Latent Consistency Models.

Although LCM-LoRA can also be integrated with other custom models, we'll compare both LoRA modules working on the Stable Diffusion XL base model.

| Model | Inference time | Memory |
|---|---|---|
| LoRA 8 steps | 1.8s | 11.24 GB |
| LCM-LoRA 8 steps | 2.2s | 11.65 GB |
| LoRA 4 steps | 1.2s | 11.24 GB |
| LCM-LoRA 4 steps | 1.5s | 11.65 GB |
| LoRA 2 steps | 1s | 11.24 GB |
| LCM-LoRA 2 steps | 1.1s | 11.65 GB |

With only a few steps LCM-LoRA doesn't stand a chance: it takes at least 8 steps to start seeing some quality in the result. At that point, the speed improvement begins to be questionable.

There is not much more to say. The quality is way behind, it's slower and also consumes more memory. LCM-LoRA... thanks for your contribution!

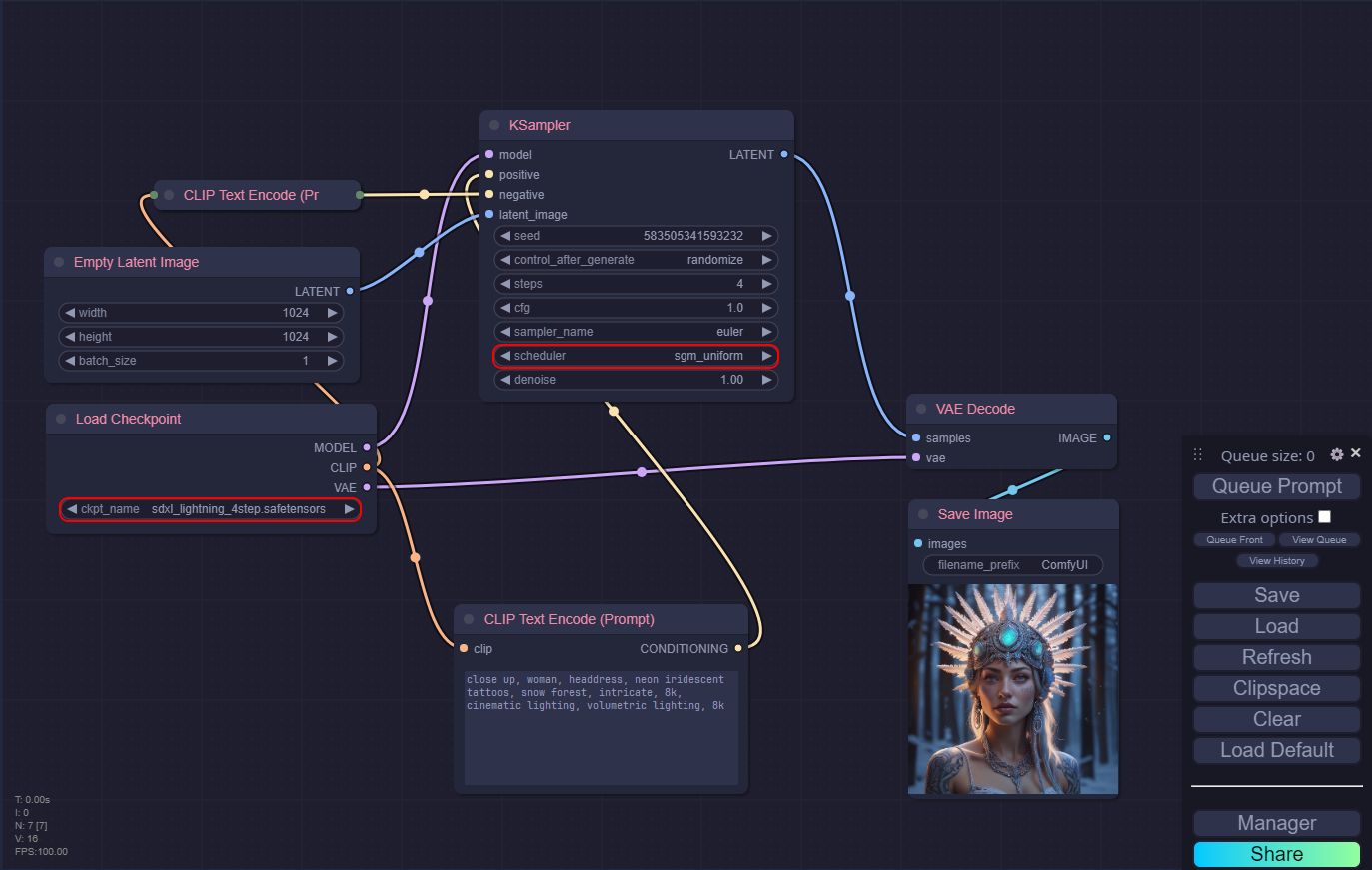

ComfyUI

Using SDXL-Lightning is very easy. The only things to keep in mind are to use the full model (not the U-Net) and to set the scheduler to sgm_uniform (within the KSampler node).
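To picture what a uniform schedule means for a few-step model, here is a minimal sketch of evenly spaced timesteps that start at the noisiest one. It assumes 1000 training timesteps and mirrors the "trailing" spacing found in some schedulers; `uniform_timesteps` is a hypothetical helper, and ComfyUI's own sgm_uniform implementation may differ in its details.

```python
def uniform_timesteps(num_inference_steps, num_train_timesteps=1000):
    """Evenly spaced timesteps, from the noisiest one downwards."""
    step = num_train_timesteps / num_inference_steps
    return [round(num_train_timesteps - i * step) - 1
            for i in range(num_inference_steps)]


for steps in (8, 4, 2, 1):
    print(steps, uniform_timesteps(steps))
```

The point of the sketch is that sampling always begins at the last training timestep, however few steps are used.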

Conclusion

In this article we have seen how this new method works, which achieves impressive images in just one step.

We have made all kinds of comparisons to find out what number of steps is the most recommended, what advantages the LoRA module provides and we have also compared it to its predecessors.

The conclusion is quite firm: this is a great job by this team of researchers who, thanks to their training method, have managed to publish a very versatile and powerful model.

If I say that it has become my favorite I would not be lying.
